A very warm welcome to all the new subscribers. I’m thrilled to have you as readers and truly appreciate your feedback and support.

Turbo-charge greater giving through your website.

MAP International saw immediate results after switching to Fundraise Up.

4x more new donors in the first 3 months

21% increase in average gift size

68% lift in online donation revenue

Game changer? It was for me too!

In this week’s SPN:

Dissecting the Google Leak

RoAS v. Scale: exploring the right budget equation to hit both

Jobs that took my fancy this week

Let’s dig in!

What Google Says vs. What's in the Docs

Recently, Google accidentally left the door open to its treasure trove of internal search ranking documents. The leak (really, a leak of internal API documentation) describes over 14,000 attributes, many of which appear to be features and signals used in ranking.

Rather amazingly, Google confirmed the leak. Amazing, because the documents offer pretty enlightening insights into specific metrics and ranking factors that were previously unknown, obfuscated, denied, or misunderstood.

At first glance, the leak underlined some obvious but handy reminders:

Google loves context. By analyzing metadata and contextual data around links (like nearby terms and font size), Google can better understand and rank your content. Think of it like giving Google a treasure map to your most valuable content.

User behavior matters. Engagement metrics such as clicks and interactions help Google determine which content is resonating with users. More engagement tends to mean better rankings. It's like getting extra points for audience applause.

Fresh content is king. Regular updates keep your content relevant and engaging, which Google rewards with higher rankings. There’s value in dusting off those old blog posts.

Snippets are your front-line soldiers in search results. High-quality snippets that directly answer donor queries can boost click-through rates and drive more traffic to your site.

Being the local hero pays off. Making your program pages relevant to the places you serve, for example, helps your content hit home with local audiences and improves your local SEO performance.

4 Non-Obvious SEO Boosters That Stood Out in the Leak

1. Contextual Anchor Information: Improve the relevance of your anchor text by considering the surrounding terms. This helps Google understand the context better.

2. Entity and Metadata Annotations: Use detailed annotations to enhance the understanding of your content’s subject matter. It will improve relevance for specific queries.

3. Quality Features for Snippets: Optimize your snippets with quality features like answer scores and passage coverage to improve their prominence in search results.

4. Link Quality Metrics: Evaluate link quality based on relevance and importance using metrics like “locality” and “bucket”.
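For item 2, one common, publicly documented way to give search engines machine-readable entity information is schema.org structured data in JSON-LD. The leak itself does not say that Google's internal entity annotations are built from this markup, so treat this as a minimal sketch under that assumption. The organization name, URLs, and the `to_jsonld_script` helper below are hypothetical placeholders; `NGO` is a schema.org subtype of `Organization`, and `areaServed` ties back to the local-relevance point above.

```python
import json

# Hypothetical organization details: replace with your own.
org = {
    "@context": "https://schema.org",
    "@type": "NGO",  # schema.org subtype of Organization for non-governmental organizations
    "name": "Example Relief Fund",
    "url": "https://www.example.org",
    "logo": "https://www.example.org/logo.png",
    # Other profiles that describe the same entity (hypothetical URLs).
    "sameAs": [
        "https://www.linkedin.com/company/example-relief-fund",
        "https://www.youtube.com/@example-relief-fund",
    ],
    # Where the programs run, which supports localized relevance.
    "areaServed": "Chicago, IL",
}


def to_jsonld_script(data: dict) -> str:
    """Wrap structured data in the JSON-LD <script> tag placed in a page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = to_jsonld_script(org)
print(snippet)
```

Paste the printed tag into the `<head>` of the relevant page, and validate it with a structured-data testing tool before relying on it.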

The Contradictions

Here’s the fun twist: sometimes what Google says publicly doesn’t quite match the internal documentation.

User Interaction Data: Publicly, Google downplays user interaction data, but internally it’s clear this data helps refine rankings.

Contextual Information Around Links: Google publicly emphasizes high-quality backlinks but internally places significant weight on the context surrounding each link.

Domain and Entity Scores: Google says it doesn’t use “Domain Authority” directly, but the documents show various scores related to entities and domains do play a role.

Quality Signals in Snippets: Google provides general guidance on snippet quality but internally uses specific scoring methods for snippet ranking.

Localized Relevance Signals: Google advises on local SEO practices but internally uses more nuanced signals for localized relevance.

Should these findings change the way you approach your SEO?

That depends on the tactics you’re using.

One big takeaway for me is that relevancy matters a lot. For example, Google likely ignores links that don’t come from relevant pages, so start there when focusing your efforts and measuring your success.

If your link acquisition tactics are based on earning links with PR from high-quality press publications, the main thing is to make sure your team are pitching relevant stories. Don’t assume that any link from a high-authority publication will be rewarded.

It also explains why I see PR-earned links having such a positive impact on organic search success. Amen to cross-org collaboration!

Jobs & Opps 🛠️

Johns Hopkins University: Executive Director of Principal Gifts ($143,500 - $251,200)

World Central Kitchen: Director, Individual Donor Relations

Climate Imperative Foundation: US Energy Initiative Director ($210,000 - $230,000)

Mercy Corps: VP Comms ($142,000 - $180,000)

The World Bank: Senior Digital Development Specialist, Digital Development

UNICEF: Sports Partnership Consultant

Obama Foundation: VP Comms ($192,945 - $241,205)

The Trevor Foundation: Senior Philanthropy Officer ($100,000 - $110,000)

Restoring Vision: VP, Global Communications & Marketing ($135,000 - $155,000)

The Michael J. Fox Foundation for Parkinson’s Research: CFO ($300,000 - $375,000)

RoAS v. Scale: What’s the Right Budget Equation?

I may have butted heads with CFOs on occasion, usually during budget planning season: in June, when planning the budget for the next fiscal year, and in October, when planning an ad hoc pool for end-of-year (EOY) heavy-ups.

Do we drop the RoAS (return on ad spend) threshold and generate more money?

Or do we hold strong and risk losing out on some revenue?

Will these new donors convert into monthly in the new year, paying off in the long run?

What’s the hit on the cash flow?

The right answer to each of these questions is “it depends.” But I’ve learnt that doesn’t help much! So here’s the formula I’ve used for budgeting (allocating a nominal $100) for a performance-focused digital fundraising team driving mass- and mid-market dollars.

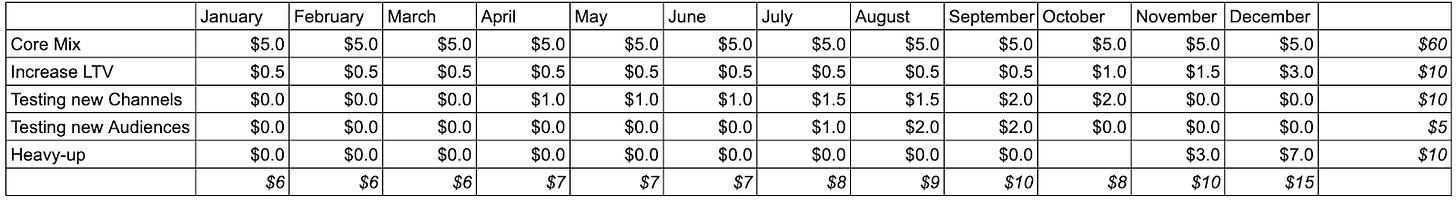

Tactical Breakdown

Reserve 10% ($10) for heavy-ups. Deploy this untouchable reserve only to double down (2x) on the best-performing tactics when conditions are right: an EOY push, an unfortunate emergency that affects your organization’s fundraising, or a new channel from the test bucket that shows exceptionally strong results.

Reserve another $10 for testing new channels. This is the bucket to try out a new social media platform, sign a contract with a creator, or test out-of-home advertising. In the worst case, this part of the budget will drive a 0:1 return (I had that happen when I took over the YouTube homepage on Christmas Day a few years back; it still sounds like a great idea to me even now!). Even then, the over-performance of the heavy-up reserve will compensate for any underperformance here.

Deploy $60 into the “core mix” of your campaigns. The core mix is what worked last year: all the tactics still running on the last day of the budget cycle. Break it down further as follows:

Allocate $45 to prospecting: seeking new donors from tried-and-true audiences or slight variations on them. Usually that’s $14 for programmatic/display advertising, $14 for paid social, $9 for non-branded paid search, $5 for video, and $3 for branded paid search.

Allocate $10 to reactivating lapsed donors, broken down as $4 for display advertising, $4 for paid social, and $2 for video.

Allocate the last $5 to retargeting tactics for potential donors who hit the website after a prospecting click but drop off before their first donation.

Allocate $10 to retention and “increase the LTV” (lifetime value) campaigns for existing active donors, mainly across paid social ($5), display ($2), video ($2), and email ($1).

Allocate $5 to testing entirely new “controversial” audiences in your best-performing prospecting channels. Unlike the “test new channels” bucket, this $5 targets audiences that are excluded from all your core mix campaigns.

All your donors support Political Party X? Spend this $5 targeting young supporters of Political Party Y.

Your org supports a religious cause? Spend this $5 targeting self-identified atheists or members of a different faith.

Donor surveys or persona studies can also inform these choices, but the goal is to target new audiences that haven’t been tried before.
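The allocation above is a small tree of buckets and channels, so one quick way to sanity-check it, or to re-scale it from the nominal $100 to a real budget, is to encode it and sum each level. This is a minimal sketch: the bucket and channel keys are hypothetical labels mirroring the text, and `total` and `scale` are illustrative helpers. The $5 tools budget discussed later sits outside this $95.

```python
# Nominal allocation from the text; leaves are dollars out of a $100 budget.
BUDGET = {
    "heavy_up_reserve": 10,
    "new_channel_tests": 10,
    "core_mix": {
        "prospecting": {
            "programmatic_display": 14,
            "paid_social": 14,
            "non_branded_search": 9,
            "video": 5,
            "branded_search": 3,
        },
        "lapsed_reactivation": {"display": 4, "paid_social": 4, "video": 2},
        "retargeting": 5,
    },
    "retention_ltv": {"paid_social": 5, "display": 2, "video": 2, "email": 1},
    "controversial_audiences": 5,
}


def total(node) -> float:
    """Recursively sum a nested allocation; non-dict leaves are amounts."""
    if isinstance(node, dict):
        return sum(total(child) for child in node.values())
    return node


def scale(node, factor: float):
    """Return a copy of the allocation with every leaf multiplied by factor."""
    if isinstance(node, dict):
        return {key: scale(child, factor) for key, child in node.items()}
    return node * factor


for bucket, node in BUDGET.items():
    print(f"{bucket:<26} ${total(node):>4}")
print(f"{'total (excl. tools)':<26} ${total(BUDGET):>4}")

# Example: a hypothetical $250,000 annual budget is 2,500x the nominal $100.
real = scale(BUDGET, 2500)
print(f"core mix at $250k: ${total(real['core_mix']):,}")
```

Keeping the breakdown as data rather than as hand-typed spreadsheet cells makes it easy to check that every level still adds up after a mid-year reallocation.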

The breakdown above consistently allowed me to improve performance year on year, reaching new revenue heights while still improving RoAS. Assuming a flat budget YoY, it would usually play out as follows:

The “core mix” would improve performance by ~5% thanks to constant tactical optimizations, reaching 63% of the previous year’s revenue at 60% of the spend.

Likewise, the LTV campaigns would improve on last year, driving 11% of revenue at 10% of spend.

The “test budget” would average out to the same performance as last year’s core campaigns: about 10% of revenue at 10% of spend.

The new, “controversial” audiences would underperform on average, driving about 2.5% of revenue at 5% of spend. Still, they would also highlight one or two groups with the potential for a double-down.

The heavy-up bucket, deployed towards a new audience or a new channel that worked exceptionally well in testing, or even a set of core tactics limited by budget, would over-perform, driving 20% of revenue at 10% of spend.

This formula consistently allowed me to achieve ~115% of last year’s revenue at 95% of spend.

And the last 5%? Spend it on new tools that can make your fundraising smarter. While tool adoption is harder to measure, it usually pays off at least 1:1, bringing the total to 120% of last year’s revenue at the same spend, plus a wealth of new channel and audience insights to inform next year’s targets.
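The year-over-year figures above reduce to simple arithmetic. The sketch below encodes each bucket’s share of spend and of last year’s revenue exactly as listed, under one stated assumption: last year’s results are normalized so every bucket returned at the same blended RoAS, which means a ratio of 1.0 reads as “as good as last year.” Bucket names and the `relative_roas` helper are illustrative. Summed as listed, the items come to 106.5% of revenue at 95% of spend (about 111.5% at 100% once tools return 1:1), a little under the ~115% and 120% figures quoted, so read the per-bucket percentages as rounded illustrations rather than an exact decomposition.

```python
# name: (share of spend %, share of last year's revenue %), as listed in the text.
# Assumption: last year's revenue share equaled spend share for every bucket
# (a normalized baseline RoAS of 1.0).
BUCKETS = {
    "core_mix": (60, 63),
    "ltv_campaigns": (10, 11),
    "channel_tests": (10, 10),
    "controversial_audiences": (5, 2.5),
    "heavy_up": (10, 20),
}


def relative_roas(spend: float, revenue: float) -> float:
    """Revenue per unit of spend, relative to last year's blended RoAS."""
    return revenue / spend


for name, (spend, revenue) in BUCKETS.items():
    print(f"{name:<24} {relative_roas(spend, revenue):.2f}x")

total_spend = sum(spend for spend, _ in BUCKETS.values())
total_revenue = sum(revenue for _, revenue in BUCKETS.values())
print(f"itemized: {total_revenue}% of revenue at {total_spend}% of spend")

# Tools: 5% of spend, assumed to return 1:1 against the normalized baseline.
tools_spend, tools_revenue = 5, 5
print(
    f"with tools: {total_revenue + tools_revenue}% of revenue "
    f"at {total_spend + tools_spend}% of spend"
)
```

The per-bucket ratios make the trade-off explicit: the controversial audiences run at 0.5x and the heavy-up at 2.0x, and the portfolio only works because the reserve is deployed behind proven winners.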

You can accelerate revenue further by reinvesting part of the extra gain to grow the budget each year while keeping the breakdown above, putting the org on a healthy trajectory of steady growth.

Monthly Breakdown Throughout the Year

Visualizing the formula, here’s how I sketch the annual budget breakdown using the same $100 total ($95 excluding tools):

That’s all for today!

If you enjoyed this edition, please consider sharing it with your network. Thank you to those who do. If a friend sent this to you, get the next edition of SPN by signing up below.

And huge thanks to this quarter’s sponsor, Fundraise Up, for creating a new standard for online giving.

Now on to the interesting stuff!

Reads From My Week

An Anonymous Source Shared Thousands of Leaked Google Search API Documents with Me; Everyone in SEO Should See Them (SparkToro Blog)

TikTok’s New AI Ad Offerings Are a Mixed Bag, Agency Execs Say (AdAge)

Forget ROAS, The New Retail Metrics Game (AdExchanger)

The Great AI Challenge: We Test Five Top Bots on Useful, Everyday Skills (WSJ)

The Trade Desk Ranks The Top 100 Publishers (AdWeek)

Creating Surround Sound Consumer Experiences (Aaron Goldman)

How Shein and Temu Snuck Up on Amazon (Big Technology)

Secrets From the Algorithm: Google Search’s Internal Engineering Documentation Has Leaked (iPullRank)

How AI’s Energy Needs Conflict with Media Agencies’ Carbon Reduction Efforts (Digiday)